We wanted to build an application based on the writeback capability of Microsoft SQL Server Analysis Services. The dream was to have a cube with multiple dimensions, allowing our users to analyze data by slicing and dicing the cube and, based on their analysis, make changes to the numbers at various levels.

Once they were happy with the changed data, we were to flow it to a downstream system. It was to be a highly interactive system that let a reasonably competent user plan targets by carrying out what-if analysis on the existing data.

The front end for the user was Excel 2007, as the navigation / user interface was phenomenal and all the users in our company were fairly competent in Excel.

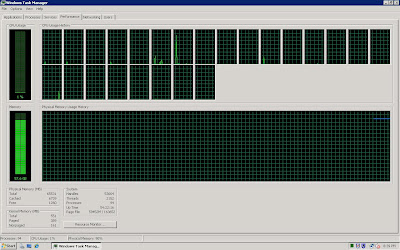

The path we started down was to have SQL Server Analysis Services 2005 as the backend, sitting on a hexa-core, 4-processor Windows Server 2008 box. We then enabled the cube for writeback. The way this works, as I understand it at a high level, is that whatever numbers you choose to write back are not changed directly in your cube. Instead, the server creates a writeback partition that stores the offsetting (net) values, so that you see the required number on your front end.

Let me explain that a little more with an example. Let's say that for one depot and one product the value was initially 100, and as part of the writeback you want it to be 70. What actually happens is that an entry of -30 is made in the writeback partition for that depot and that product. Based on a join of the two, the number displayed is 70.
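The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the delta idea, not how Analysis Services stores things internally; the dictionaries and the `write_back` / `read` helpers are made-up names for this example.

```python
# Hypothetical in-memory stand-ins for the cube's fact data and its
# writeback partition. Keys are (depot, product) pairs.
base = {("Depot A", "Product X"): 100.0}
writeback = []  # list of (key, delta) entries; the base data is never touched


def read(key):
    """Value shown to the user: base value plus every delta stored for that key."""
    return base.get(key, 0.0) + sum(delta for k, delta in writeback if k == key)


def write_back(key, new_value):
    """Record the difference between the requested value and the current one."""
    writeback.append((key, new_value - read(key)))


write_back(("Depot A", "Product X"), 70.0)
print(writeback)                        # one entry holding the -30 delta
print(read(("Depot A", "Product X")))   # the value the front end displays
```

The base value stays at 100; only the combination of base and delta gives the 70 the user asked for.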

While this works perfectly for small sets of data, it fails miserably for larger sets. The solution becomes impractical and very sluggish if the writeback is made at an aggregate level and affects a large number of leaves / tuples, because every affected leaf needs its own entry in the writeback partition. After engaging with Microsoft Consulting, we confirmed that this was not going to work out for the application we had in mind.
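To get a feel for why aggregate-level writeback hurts, here is a rough Python sketch. It assumes the change to a total is spread equally across all the leaf cells underneath it; the `spread_equally` helper and the leaf keys are hypothetical, and the real server-side allocation may behave differently.

```python
def spread_equally(leaf_keys, current_total, new_total):
    """Return one delta entry per leaf cell under the aggregate being changed."""
    per_leaf = (new_total - current_total) / len(leaf_keys)
    return [(key, per_leaf) for key in leaf_keys]


# A single change at the top level that sits above 300,000 leaf cells.
leaves = [("Depot %d" % d, "Product %d" % p) for d in range(300) for p in range(1000)]
deltas = spread_equally(leaves, current_total=100.0, new_total=70.0)
print(len(deltas))  # one delta row per leaf, all produced by one user edit
```

One number typed by the user turns into as many delta rows as there are leaves, and every later query has to combine them with the base data.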

OLAP writeback sucks, period!!! Even Microsoft didn't have a response :) But just because writeback through the writeback partition is hopeless, it does not mean that it's the only way to write user inputs back into the cube.

The solution we finally deployed was a combination of the following:

VBA (macro coding) / web services / SQL Server 2008 Integration Services and SQL Server 2008 Analysis Services.

The Excel workbook that was shared with the users had custom-built macro code that essentially looped through the page / row / column fields to gather the user's selections. Care had to be taken to loop through both the dimension members and the attributes. (This is a feature of SQL Server 2005 and later; do read up on it if you are unaware.) Also note that it gets more complicated in Pivot 12, the PivotTable version that comes with Excel 2007, as there is extended capability in terms of Value and Label filters, both of which need to be captured as well in case someone has applied them.

Once all the filters have been looped through and the details captured, the macro uses this data to frame a CREATE SUBCUBE query that gets passed to the server using a web service. The sub cube that gets created on the fly contains all the data from the main cube that needs to undergo the writeback / change.
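As a rough idea of what framing that query can look like, here is a small Python sketch (our actual macro was VBA). The `build_create_subcube` helper, the `[Sales]` cube and the member names are made up for illustration. I am assuming one set per axis and, as far as I know, the sub cube carries the same name as the cube it restricts, so do verify the final statement against your own server.

```python
def build_create_subcube(cube, selections):
    """Frame a CREATE SUBCUBE statement from the captured filter selections.

    selections: one list of member unique names per filtered hierarchy.
    """
    sets = ["{" + ", ".join(members) + "}" for members in selections]
    axes = ", ".join("%s ON %d" % (s, i) for i, s in enumerate(sets))
    return "CREATE SUBCUBE [%s] AS SELECT %s FROM [%s]" % (cube, axes, cube)


# Hypothetical selections gathered from the pivot's page / row / column fields.
selections = [
    ["[Depot].[Depot].&[D1]"],
    ["[Product].[Product].&[P1]", "[Product].[Product].&[P2]"],
]
print(build_create_subcube("Sales", selections))
```

The Value and Label filters would need extra handling on top of this, since they are conditions rather than plain member lists.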

The next step is to strip this newly created sub cube down to the underlying rowset of data that makes it up. Once we have that in a table, we have the option of applying pretty much any rule to the data: disaggregation, static values, a percentage of the existing value, or a percentage of another value.
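The rules themselves are simple once the rows are in a table. Below is a minimal Python sketch of the four rule types on a plain list of values; the function names are made up, and the proportional split in `disaggregate` is just one possible way to spread a new total (with an equal split as a fallback when the existing values sum to zero).

```python
def set_static(values, new_value):
    """Static value: every row gets the same number."""
    return [new_value for _ in values]


def percent_of_existing(values, pct):
    """Percentage of the existing value, e.g. pct=70 keeps 70% of each row."""
    return [v * pct / 100 for v in values]


def percent_of_other(other_values, pct):
    """Percentage of another value, e.g. target = 110% of last year's rows."""
    return [o * pct / 100 for o in other_values]


def disaggregate(values, new_total):
    """Spread a new total over the rows in proportion to their current values."""
    total = sum(values)
    if total == 0:
        return [new_total / len(values) for _ in values]
    return [v * new_total / total for v in values]


rows = [60.0, 40.0]             # two leaf rows that currently add up to 100
print(disaggregate(rows, 70))   # the rows now add up to 70
```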

After the rule is applied, the changed data is updated into the cube's base data and an incremental process is carried out. Voila, you have implemented your writeback.

Just to share some of the numbers, we were able to carry out a writeback affecting 3 lakh records (that's three hundred thousand records, for those outside India :) ) in a little over 2 minutes, with the majority of the time spent in the incremental processing. This will of course depend on the size and complexity of your own cube.

If you're curious about the rest of the design: since we had the changes in a table, we could build an SSIS package to incrementally flow this information downstream into a DB2 environment using OLE DB / DB2 providers, which of course has its own problems in life :)